Testing a movingtarget_quest_dynatrace

- 1. Confidential, Dynatrace LLC Testing a Moving Target How Do We Test Machine Learning Systems? Peter Varhol, Dynatrace LLC

- 2. About me • International speaker and writer • Degrees in Math, CS, Psychology • Evangelist at Dynatrace • Former university professor, tech journalist

- 3. What You Will Learn • What kinds of systems produce nondeterministic results • Why we can’t test these systems using traditional techniques • How we can assess, measure, and communicate quality with learning and adaptive systems

- 4. Agenda • What are machine learning and adaptive systems? • How are these systems evaluated? • Challenges in testing these systems • What constitutes a bug? • Summary and conclusions

- 5. We Think We Know Testing • We test deterministic systems • For a given input, the output is always the same • And we know what the output is supposed to be • If the output is something else • We may have a bug • We know nothing

- 6. Machine Learning and Adaptive Systems • We are now building a different kind of software • It never returns the same result • That doesn’t make it wrong • How can we assess the quality? • How do we know if there is a bug?

- 7. How Does This Happen? • The problem domain is ambiguous • There is no single “right” answer • “Close enough” is good • We don’t know quite why the software responds as it does • We can’t easily trace code paths

- 8. What Technologies Are Involved? • Neural networks • Genetic algorithms • Rules engines • Feedback mechanisms • Sometimes hardware

- 9. Neural Networks • Set of layered algorithms whose variables can be adjusted via a learning process • The learning process involves training with known inputs and outputs • The algorithms adjust coefficients to converge on the correct answer (or not) • You freeze the algorithms and coefficients, and deploy

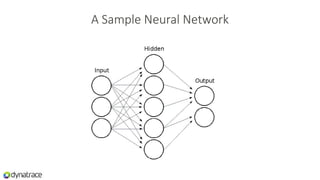

- 10. A Sample Neural Network

- 11. Genetic Algorithms • Use the principle of natural selection • Create a range of possible solutions • Try out each of them • Choose and combine two of the better alternatives • Rinse and repeat as necessary
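The loop on this slide (create candidates, try each one, combine two of the better ones, repeat) can be sketched in a few lines. This is a minimal illustration, not anything taken from the talk: the `fitness` function, the population size, and the mutation size are all assumptions chosen so the example is easy to follow.

```python
import random


def fitness(x):
    # Hypothetical objective for illustration only: best possible value is at x = 3.
    return -(x - 3.0) ** 2


def evolve(generations=100, pop_size=20, mutation=0.3, seed=0):
    rng = random.Random(seed)
    # Create a range of possible solutions.
    population = [rng.uniform(-10.0, 10.0) for _ in range(pop_size)]
    for _ in range(generations):
        # Try out each candidate and keep the better half.
        ranked = sorted(population, key=fitness, reverse=True)
        parents = ranked[: pop_size // 2]
        children = []
        while len(children) < pop_size - len(parents):
            # Choose and combine two of the better alternatives, then mutate slightly.
            a, b = rng.sample(parents, 2)
            children.append((a + b) / 2 + rng.gauss(0.0, mutation))
        population = parents + children
    return max(population, key=fitness)


best = evolve()
print(f"best candidate found: {best:.3f}")
```

Because of the random mutation, the answer is "close enough" to 3 rather than exactly 3, which is the testing problem the rest of the deck discusses.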

- 12. Rules Engines • Layers of if-then rules, with likelihoods associated • With complex inputs, the results can be different • Determining what rules/probabilities should be changed is almost impossible • How do we measure quality?

- 13. How Are These Systems Used? • Transportation • Self-driving cars • Aircraft • Ecommerce • Recommendation engines • Finance • Stock trading systems

- 14. A Practical Example • Electric wind sensor • Determines wind speed and direction • Based on the cooling of filaments • Several hundred data points of known results • Designed a three-layer neural network • Then used the known data to train it

- 15. Another Practical Example • Retail recommendation engines • Other people bought this • You may also be interested in that • They don’t have to be perfect • But they can bring in additional revenue

- 16. Challenges to Validating Requirements • What does it mean to be correct? • The result will be different every time • There is no one single right answer • How will this really work in production? • How do I test it at all?

- 17. Possible Answers • Only look at outputs for given inputs • And set accuracy parameters • Don’t look at the outputs at all • Focus on performance/usability/other features • We can’t test accuracy • Throw up our hands and go home

- 18. Testing Machine Learning Systems • Have objective acceptance criteria • Test with new data • Don’t count on all results being accurate • Understand the architecture of the network as a part of the testing process • Communicate the level of confidence you have in the results to management and users
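One way to read "objective acceptance criteria" and "test with new data" is as a pass rate over held-out cases, each judged against an agreed tolerance. The sketch below assumes a numeric output; `frozen_model`, the held-out pairs, the tolerance, and the required pass rate are all made-up placeholders, not values from the talk.

```python
def within_tolerance(predicted, actual, tolerance):
    # A single answer is "close enough" if it falls inside the agreed error band.
    return abs(predicted - actual) <= tolerance


def acceptance_rate(model, cases, tolerance):
    # Fraction of held-out cases whose prediction is close enough.
    hits = sum(within_tolerance(model(x), y, tolerance) for x, y in cases)
    return hits / len(cases)


def frozen_model(x):
    # Hypothetical stand-in for a trained network whose coefficients are frozen.
    return 2.0 * x + 0.1


# Hypothetical fresh (input, known output) pairs that were NOT used in training.
holdout = [(1.0, 2.0), (2.0, 4.2), (3.0, 6.0), (4.0, 9.0), (5.0, 10.1)]

rate = acceptance_rate(frozen_model, holdout, tolerance=0.25)
passed = rate >= 0.75  # assumed acceptance criterion: at least 75% close enough
print(f"{rate:.0%} of held-out cases within tolerance, accepted: {passed}")
```

The criterion tolerates some wrong answers by design; what counts as a defect is falling below the agreed rate, not any individual miss.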

- 19. What About Adaptive Systems? • Adaptive systems are very similar to machine learning • The problems solved are slightly different • Neural algorithms are used, and trained • But the algorithms aren’t frozen in production

- 20. Machine Learning and Adaptive Systems • These are two different things • Machine learning systems get training, but are static after deployment • Adaptive systems continue to adapt in production • They dynamically optimize • They require feedback

- 21. Adaptive Systems • Airline pricing • Ticket prices change three times a day based on demand • It can cost less to go farther • It can cost less later • Ecommerce systems • Recommendations try to discern what else you might want • Can I incentivize you to fill up the plane?

- 22. Recommendation Engines Can Be Very Wrong • Brooks Ghost running shoes • Versus ghost costumes • We don’t take context into account • But do they make money? • Well, probably

- 23. Considerations for Testing Adaptive Systems • You need test scenarios • Best case, average case, and worst case • You will not reach mathematical optimization • Determine what level of outcomes are acceptable for each scenario • Defects will be reflected in the inability of the model to achieve goals
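The scenario idea on this slide could be expressed as a small table of cases, each with the lowest outcome the team has agreed to accept. Everything in this sketch is hypothetical: `stand_in_system` is a toy replacement for the real adaptive system, and the demand figures and revenue thresholds are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    name: str
    demand: int          # hypothetical number of would-be buyers
    min_revenue: float   # lowest outcome agreed to be acceptable


def stand_in_system(demand, capacity=100, base_price=10.0):
    # Toy placeholder for the system under test: price rises as capacity fills.
    sold = min(demand, capacity)
    price = base_price * (0.5 + sold / capacity)
    return sold * price


def failing_scenarios(system, scenarios):
    # A defect shows up as a scenario whose goal the system cannot reach.
    return [s.name for s in scenarios if system(s.demand) < s.min_revenue]


scenarios = [
    Scenario("best case", demand=150, min_revenue=1200.0),
    Scenario("average case", demand=60, min_revenue=500.0),
    Scenario("worst case", demand=10, min_revenue=100.0),
]

failures = failing_scenarios(stand_in_system, scenarios)
print("scenarios below the acceptable outcome:", failures)
```

The thresholds are business decisions rather than mathematical optima, which matches the slide's point that optimization will not be reached.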

- 24. What Does Being Correct Mean? • Are we making money? • Is the adaptive system more efficient? • Are recommendations being picked up? • Is it worthwhile to test recommendations? • How would you score that?

- 25. These Are Very Different Measures • We have never tested these characteristics before • Can we learn? • How do we make quality recommendations? • Consistency? • Value? • Does it matter?

- 26. Objections • I will never encounter this type of application! • You might be surprised • I will do what I’ve always done • Um, no you won’t • My goals will be defined by others • Unless they’re not • You may be the one

- 27. How Do We Test These Things? • Multiple inputs at one time • Inputs may be ambiguous or approximate • The output may be different each time • Testing accuracy is a fool’s game • Past data • We know how different pricing strategies turned out • We made recommendations in the past

- 28. What Is a Bug? • A mismatch between inputs and outputs? • It’s supposed to be that way! • Not every recommendation will be a good one • But that doesn’t mean it’s a bug • Too many wrong answers • Define “too many”

- 29. We Found a Bug, Now What? • The bug could be unrelated to the neural network • Treat it as a normal bug • If the neural network is involved • Determine a definition of inaccurate • Determine the likelihood of an inaccurate answer • This may involve serious redevelopment

- 30. Conclusions • We have little experience with learning and adaptive systems • Requirements have to be very different • We need to understand the difference between correct and accurate • We need objective requirements • And the ability to measure them • And the ability to communicate what they mean

- 31. Thank You Peter Varhol Dynatrace LLC peter.varhol@Dynatrace.com

Editor's Notes

- #6: Software testing is, in theory, a fairly straightforward activity. For every input, there is a defined and known output. We enter values, make selections, or navigate an application, and compare the actual result with the expected one. If they match, we nod and move on. If they don’t, we possibly have a bug. The point is that we already know what the output is supposed to be. Granted, sometimes an output is not well-defined, and there can be some ambiguity, so you get disagreements on whether a particular result represents a bug or something else.

- #7: But there is a type of software where having a defined output is no longer the case. Actually, two types. One is machine learning systems. The second is predictive analytics, or adaptive systems.

- #10: Most machine learning systems are based on neural networks. A neural network is a set of layered algorithms whose variables can be adjusted via a learning process. The learning process involves using known data inputs to create outputs that are then compared with known results. When the algorithms reflect the known results with the desired degree of accuracy, the algebraic coefficients are frozen and production code is generated. Today, this comprises much of what we understand as artificial intelligence.

- #14: These types of software are becoming increasingly common, in areas such as ecommerce, public transportation, automotive, finance, and computer networks. They have the potential to make decisions given sufficiently well-defined inputs and goals. In some instances, they are characterized as artificial intelligence, in that they seemingly make decisions that were once the purview of a human user or operator. Other examples: decision augmentation, personal assistants.

- #15: Both of these types of systems have things in common. For one thing, neither produces an “exact” result. In fact, sometimes they can produce an obviously incorrect result. But they are extremely useful in a number of situations when data already exists on the relationship between recorded inputs and intended results. Let me give you an example. Years ago, I devised a neural network that worked as a part of an electronic wind sensor. The sensor worked through the wind cooling it, with a precise decrease in temperature at specific wind speeds and directions. I built a neural network that had three layers of algebraic equations, each with four to five separate equations in individual nodes, computing in parallel. They would use starting coefficients, then adjust those coefficients based on a comparison between the algorithmic output and the actual answer. I then trained it. I had over 500 data points regarding known wind speed and direction, and the extent to which the sensor cooled. The network I created passed each input into its equations, through the multiple layers, and produced an answer. At first, the answer from the network probably wasn’t that close to the known correct answer. But the algorithm was able to adjust itself based on the actual answer. After multiple iterations with the training data, the coefficients should gradually home in on accurate and consistent results.
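The training loop described in this note (start with arbitrary coefficients, compare the network's answer with the known answer, adjust, repeat, then freeze) can be sketched as follows. This is not the wind-sensor network itself: it assumes a much smaller layout (one input, one hidden layer of four nodes, one output), plain gradient descent, and synthetic data, all chosen only to keep the example short.

```python
import math
import random


def forward(params, x):
    # Pass one input through the hidden nodes and combine them into an answer.
    w1, b1, w2, b2 = params
    hidden = [math.tanh(w * x + b) for w, b in zip(w1, b1)]
    output = sum(w * h for w, h in zip(w2, hidden)) + b2[0]
    return hidden, output


def mean_squared_error(params, data):
    return sum((forward(params, x)[1] - y) ** 2 for x, y in data) / len(data)


def initial_params(hidden_nodes=4, seed=0):
    rng = random.Random(seed)
    w1 = [rng.uniform(-1.0, 1.0) for _ in range(hidden_nodes)]
    w2 = [rng.uniform(-1.0, 1.0) for _ in range(hidden_nodes)]
    return (w1, [0.0] * hidden_nodes, w2, [0.0])


def train(params, data, epochs=500, lr=0.05):
    w1, b1, w2, b2 = params
    for _ in range(epochs):
        for x, y in data:
            hidden, output = forward(params, x)
            error = output - y  # comparison between network answer and known answer
            for j in range(len(w1)):
                # Gradient for the hidden node, computed before w2[j] changes.
                grad_hidden = error * w2[j] * (1.0 - hidden[j] ** 2)
                w2[j] -= lr * error * hidden[j]
                w1[j] -= lr * grad_hidden * x
                b1[j] -= lr * grad_hidden
            b2[0] -= lr * error
    return params  # after training, these coefficients are treated as frozen


# Synthetic stand-in for "known inputs and outputs" (NOT the author's sensor data).
training_data = [(i / 19, (i / 19) ** 2) for i in range(20)]

params = initial_params()
loss_before = mean_squared_error(params, training_data)
params = train(params, training_data)
loss_after = mean_squared_error(params, training_data)
print(f"mean squared error before: {loss_before:.4f}, after: {loss_after:.4f}")
```

The error shrinks with training but does not reach zero, which is why the notes that follow talk about acceptable error rather than exact answers.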

- #17: How do you test this? You do know what the answer is supposed to be, because you built the network using data with known results, but you will rarely get an exactly correct answer every time. The product is actually tested during the training process. Training either converges on accurate results, or it diverges. The question is how you evaluate the quality of the network.

- #19: Have objective acceptance criteria. Know the amount of error you and your users are willing to accept. Test with new data. Once you’ve trained the network and frozen the architecture and coefficients, use fresh inputs and outputs to verify its accuracy. Don’t count on all results being accurate. That’s just the nature of the beast. And you may have to recommend throwing out the entire network architecture and starting over. Understand the architecture of the network as a part of the testing process. Few if any will be able to actually follow a set of inputs through the network of algorithms, but understanding how the network is constructed will help testers determine if another architecture might produce better results. Communicate the level of confidence you have in the results to management and users. Machine learning systems offer you the unique opportunity to describe confidence in statistical terms, so use them. One important thing to note is that the training data itself could well contain inaccuracies. In this case, because of measurement error, the recorded wind speed and direction could be off or ambiguous. In other cases, the cooling of the filament likely has some error in its measurement.
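To describe confidence "in statistical terms", one option is to report an interval around the observed accuracy rather than a single number. The sketch below uses the Wilson score interval for a proportion, which is a choice made here for illustration and not a method named in the talk; the counts (92 acceptable answers out of 100 fresh test cases) are hypothetical.

```python
import math


def wilson_interval(successes, trials, z=1.96):
    # Wilson score interval for a proportion; z = 1.96 gives roughly 95% confidence.
    if trials <= 0:
        raise ValueError("trials must be positive")
    p = successes / trials
    denom = 1.0 + z ** 2 / trials
    centre = (p + z ** 2 / (2 * trials)) / denom
    half_width = z * math.sqrt(p * (1 - p) / trials + z ** 2 / (4 * trials ** 2)) / denom
    return centre - half_width, centre + half_width


# Hypothetical result: 92 of 100 fresh test cases fell within the accepted error.
low, high = wilson_interval(successes=92, trials=100)
print(f"observed 92% acceptable; plausible range {low:.1%} to {high:.1%}")
```

Reporting the range makes it clear to management and users that a small test set supports only a loose claim, and that errors in the reference data itself widen the uncertainty further.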

- #21: Another type of network might be termed predictive analytics, or adaptive systems. These systems continue to adapt after deployment, using a feedback loop to adjust variables and coefficients within the algorithm. They learn while in production use and, given certain measurable goals, adjust aspects of their algorithms to better reach those goals. One example is a system under development in the UK to implement demand-based pricing for train service. Its goal is to encourage riders to use the train during non-peak times, so it dynamically adjusts pricing to make it financially attractive to ride when the trains aren’t as crowded. This type of application experiments with different pricing strategies and tries to optimize two different things: a balance of ridership throughout the day, and acceptable revenue from ridership. A true mathematical optimization isn’t possible, but the goal is to reach a state of spread-out ridership and revenue that at least covers costs.
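The feedback loop in this note can be illustrated with a deliberately simple controller: observe ridership, compare it with the goal, and nudge the price. This is only a sketch under strong assumptions. The linear demand curve in `simulated_ridership`, the target, and the `gain` are invented, and a real pricing system would be far more involved than this proportional adjustment.

```python
def simulated_ridership(price, base_demand=1000.0, sensitivity=40.0):
    # Hypothetical demand curve: fewer riders as the peak-time price rises.
    return max(0.0, base_demand - sensitivity * price)


def adapt_price(price, target_riders, steps=50, gain=0.01):
    history = []
    for _ in range(steps):
        riders = simulated_ridership(price)     # feedback from production use
        error = riders - target_riders          # distance from the measurable goal
        price = max(0.0, price + gain * error)  # too crowded: raise price; too empty: lower it
        history.append((price, riders))
    return price, history


final_price, history = adapt_price(price=5.0, target_riders=600.0)
print(f"price settled near {final_price:.2f} "
      f"with about {simulated_ridership(final_price):.0f} peak riders")
```

A test for a system like this checks whether it settles at an acceptable state under each scenario, since its output changes from one run of the loop to the next by design.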